HAIP异常,导致RAC节点无法启动的解决方案

本站文章除注明转载外,均为本站原创: 转载自love wife love life —Roger的Oracle/MySQL/PostgreSQL数据恢复博客

本文链接地址: HAIP异常,导致RAC节点无法启动的解决方案

一个网友咨询一个问题,他的11.2.0.2 RAC(for Aix),没有安装任何patch或PSU。其中一个节点重启之后无法正常启动,查看ocssd日志如下:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 |

2014-08-09 14:21:46.094: [ CSSD][5414]clssnmSendingThread: sent 4 join msgs to all nodes 2014-08-09 14:21:46.421: [ CSSD][4900]clssgmWaitOnEventValue: after CmInfo State val 3, eval 1 waited 0 2014-08-09 14:21:47.042: [ CSSD][4129]clssnmvDHBValidateNCopy: node 1, rac01, has a disk HB, but no network HB, DHB has rcfg 217016033, wrtcnt, 255958157, LATS 1518247992, lastSeqNo 255958154, uniqueness 1406064021, timestamp 1407565306/1501758072 2014-08-09 14:21:47.051: [ CSSD][3358]clssnmvDHBValidateNCopy: node 1, rac01, has a disk HB, but no network HB, DHB has rcfg 217016033, wrtcnt, 255958158, LATS 1518248002, lastSeqNo 255958155, uniqueness 1406064021, timestamp 1407565306/1501758190 2014-08-09 14:21:47.421: [ CSSD][4900]clssgmWaitOnEventValue: after CmInfo State val 3, eval 1 waited 0 2014-08-09 14:21:48.042: [ CSSD][4129]clssnmvDHBValidateNCopy: node 1, rac01, has a disk HB, but no network HB, DHB has rcfg 217016033, wrtcnt, 255958160, LATS 1518248993, lastSeqNo 255958157, uniqueness 1406064021, timestamp 1407565307/1501759080 2014-08-09 14:21:48.052: [ CSSD][3358]clssnmvDHBValidateNCopy: node 1, rac01, has a disk HB, but no network HB, DHB has rcfg 217016033, wrtcnt, 255958161, LATS 1518249002, lastSeqNo 255958158, uniqueness 1406064021, timestamp 1407565307/1501759191 2014-08-09 14:21:48.421: [ CSSD][4900]clssgmWaitOnEventValue: after CmInfo State val 3, eval 1 waited 0 2014-08-09 14:21:49.043: [ CSSD][4129]clssnmvDHBValidateNCopy: node 1, rac01, has a disk HB, but no network HB, DHB has rcfg 217016033, wrtcnt, 255958163, LATS 1518249993, lastSeqNo 255958160, uniqueness 1406064021, timestamp 1407565308/1501760082 2014-08-09 14:21:49.056: [ CSSD][3358]clssnmvDHBValidateNCopy: node 1, rac01, has a disk HB, but no network HB, DHB has rcfg 217016033, wrtcnt, 255958164, LATS 1518250007, lastSeqNo 255958161, uniqueness 1406064021, timestamp 1407565308/1501760193 2014-08-09 14:21:49.421: [ CSSD][4900]clssgmWaitOnEventValue: after CmInfo State val 3, eval 1 waited 0 2014-08-09 14:21:50.044: [ CSSD][4129]clssnmvDHBValidateNCopy: node 1, rac01, has a disk HB, but no network HB, DHB has rcfg 217016033, wrtcnt, 255958166, LATS 1518250994, lastSeqNo 255958163, uniqueness 1406064021, timestamp 1407565309/1501761090 2014-08-09 14:21:50.057: [ CSSD][3358]clssnmvDHBValidateNCopy: node 1, rac01, has a disk HB, but no network HB, DHB has rcfg 217016033, wrtcnt, 255958167, LATS 1518251007, lastSeqNo 255958164, uniqueness 1406064021, timestamp 1407565309/1501761195 2014-08-09 14:21:50.421: [ CSSD][4900]clssgmWaitOnEventValue: after CmInfo State val 3, eval 1 waited 0 2014-08-09 14:21:51.046: [ CSSD][4129]clssnmvDHBValidateNCopy: node 1, rac01, has a disk HB, but no network HB, DHB has rcfg 217016033, wrtcnt, 255958169, LATS 1518251996, lastSeqNo 255958166, uniqueness 1406064021, timestamp 1407565310/1501762100 2014-08-09 14:21:51.057: [ CSSD][3358]clssnmvDHBValidateNCopy: node 1, rac01, has a disk HB, but no network HB, DHB has rcfg 217016033, wrtcnt, 255958170, LATS 1518252008, lastSeqNo 255958167, uniqueness 1406064021, timestamp 1407565310/1501762205 2014-08-09 14:21:51.102: [ CSSD][5414]clssnmSendingThread: sending join msg to all nodes 2014-08-09 14:21:51.102: [ CSSD][5414]clssnmSendingThread: sent 5 join msgs to all nodes 2014-08-09 14:21:51.421: [ CSSD][4900]clssgmWaitOnEventValue: after CmInfo State val 3, eval 1 waited 0 2014-08-09 14:21:52.050: [ CSSD][4129]clssnmvDHBValidateNCopy: node 1, rac01, has a disk HB, but no network HB, DHB has rcfg 217016033, wrtcnt, 255958172, LATS 1518253000, lastSeqNo 255958169, uniqueness 1406064021, timestamp 1407565311/1501763110 2014-08-09 14:21:52.058: [ CSSD][3358]clssnmvDHBValidateNCopy: node 1, rac01, has a disk HB, but no network HB, DHB has rcfg 217016033, wrtcnt, 255958173, LATS 1518253008, lastSeqNo 255958170, uniqueness 1406064021, timestamp 1407565311/1501763230 2014-08-09 14:21:52.089: [ CSSD][5671]clssnmRcfgMgrThread: Local Join 2014-08-09 14:21:52.089: [ CSSD][5671]clssnmLocalJoinEvent: begin on node(2), waittime 193000 2014-08-09 14:21:52.089: [ CSSD][5671]clssnmLocalJoinEvent: set curtime (1518253039) for my node 2014-08-09 14:21:52.089: [ CSSD][5671]clssnmLocalJoinEvent: scanning 32 nodes 2014-08-09 14:21:52.089: [ CSSD][5671]clssnmLocalJoinEvent: Node rac01, number 1, is in an existing cluster with disk state 3 2014-08-09 14:21:52.090: [ CSSD][5671]clssnmLocalJoinEvent: takeover aborted due to cluster member node found on disk 2014-08-09 14:21:52.431: [ CSSD][4900]clssgmWaitOnEventValue: after CmInfo State val 3, eval 1 waited 0 |

从上面的信息,很容易给人感觉是心跳的问题。这么理解也不错,只是这里的心跳不是指的我们说理解的传统的心跳网络。我让他在crs正常的一个节点查询如下信息,我们就知道原因了,如下:

|

1 2 3 4 5 |

SQL> select name,ip_address from v$cluster_interconnects; NAME IP_ADDRESS --------------- ---------------- en0 169.254.116.242 |

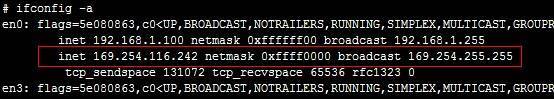

大家可以看到,这里心跳IP为什么是169网段呢?很明显跟我们的/etc/hosts设置不匹配啊?why ?

这里我们要介绍下Oracle 11gR2 引入的HAIP特性,Oracle引入该特性的目的是为了通过自身的技术来实现心跳网络的冗余,而不再依赖于第三方技术,比如Linux的bond等等。

在Oracle 11.2.0.2版本之前,如果使用了OS级别的心跳网卡绑定,那么Oracle仍然以OS绑定的为准。从11.2.0.2开始,如果没有在OS层面进行心跳冗余的配置,那么Oracle自己的HAIP就启用了。所以你虽然设置的192.168.1.100,然而实际上Oracle使用是169.254这个网段。关于这一点,大家可以去看下alert log,从该日志都能看出来,这里不多说。

我们可以看到,正常节点能看到如下的169网段的ip,问题节点确实看不到这个169的网段IP:

Oracle MOS提供了一种解决方案,如下:

crsctl start res ora.cluster_interconnect.haip -init

经过测试,使用root进行操作,也是不行的。针对HAIP的无法启动,Oracle MOS文档说通常是如下几种情况:

1) 心跳网卡异常

2) 多播工作机制异常

3)防火墙等原因

4)Oracle bug

对于心跳网卡异常,如果只有一块心跳网卡,那么ping其他的ip就可以进行验证了,这一点很容易排除。

对于多播的问题,可以通过Oracle提供的mcasttest.pl脚本进行检测(请参考Grid Infrastructure Startup During Patching, Install or Upgrade May Fail Due to Multicasting Requirement (ID 1212703.1),我这里的检查结果如下:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 |

$ ./mcasttest.pl -n rac02,rac01 -i en0 ########### Setup for node rac02 ########## Checking node access 'rac02' Checking node login 'rac02' Checking/Creating Directory /tmp/mcasttest for binary on node 'rac02' Distributing mcast2 binary to node 'rac02' ########### Setup for node rac01 ########## Checking node access 'rac01' Checking node login 'rac01' Checking/Creating Directory /tmp/mcasttest for binary on node 'rac01' Distributing mcast2 binary to node 'rac01' ########### testing Multicast on all nodes ########## Test for Multicast address 230.0.1.0 Aug 11 21:39:39 | Multicast Failed for en0 using address 230.0.1.0:42000 Test for Multicast address 224.0.0.251 Aug 11 21:40:09 | Multicast Failed for en0 using address 224.0.0.251:42001 $ |

虽然这里通过脚本检查,发现对于230和224网段都是不通的,然而这不见得一定说明是多播的问题导致的。虽然我们查看ocssd.log,通过搜索mcast关键可以看到相关的信息。

实际上,我在自己的11.2.0.3 Linux RAC环境中测试,即使mcasttest.pl测试不通,也可以正常启动CRS的。

由于网友这里是AIX,应该我就排除防火墙的问题了。因此最后怀疑Bug 9974223的可能性比较大。实际上,如果你去查询HAIP的相关信息,你会发现该特性其实存在不少的Oracle bug。

其中 for knowns HAIP issues in 11gR2/12c Grid Infrastructure (1640865.1)就记录12个HAIP相关的bug。

由于这里他的第1个节点无法操作,为了安全,是不能有太多的操作的。

对于HAIP,如果没有使用多心跳网卡的情况下,我觉得完全是可以禁止掉的。但是昨天查MOS文档,具体说不能disabled。最后测试发现其实是可以禁止掉的。如下是我的测试过程:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 |

[root@rac1 bin]# ./crsctl modify res ora.cluster_interconnect.haip -attr "ENABLED=0" -init [root@rac1 bin]# ./crsctl stop crs CRS-2791: Starting shutdown of Oracle High Availability Services-managed resources on 'rac1' CRS-2673: Attempting to stop 'ora.crsd' on 'rac1' CRS-2790: Starting shutdown of Cluster Ready Services-managed resources on 'rac1' CRS-2673: Attempting to stop 'ora.oc4j' on 'rac1' CRS-2673: Attempting to stop 'ora.cvu' on 'rac1' CRS-2673: Attempting to stop 'ora.LISTENER_SCAN1.lsnr' on 'rac1' CRS-2673: Attempting to stop 'ora.GRID.dg' on 'rac1' CRS-2673: Attempting to stop 'ora.registry.acfs' on 'rac1' CRS-2673: Attempting to stop 'ora.rac1.vip' on 'rac1' CRS-2677: Stop of 'ora.rac1.vip' on 'rac1' succeeded CRS-2672: Attempting to start 'ora.rac1.vip' on 'rac2' CRS-2677: Stop of 'ora.LISTENER_SCAN1.lsnr' on 'rac1' succeeded CRS-2673: Attempting to stop 'ora.scan1.vip' on 'rac1' CRS-2677: Stop of 'ora.scan1.vip' on 'rac1' succeeded CRS-2672: Attempting to start 'ora.scan1.vip' on 'rac2' CRS-2676: Start of 'ora.rac1.vip' on 'rac2' succeeded CRS-2676: Start of 'ora.scan1.vip' on 'rac2' succeeded CRS-2672: Attempting to start 'ora.LISTENER_SCAN1.lsnr' on 'rac2' CRS-2676: Start of 'ora.LISTENER_SCAN1.lsnr' on 'rac2' succeeded CRS-2677: Stop of 'ora.registry.acfs' on 'rac1' succeeded CRS-2677: Stop of 'ora.oc4j' on 'rac1' succeeded CRS-2677: Stop of 'ora.cvu' on 'rac1' succeeded CRS-2677: Stop of 'ora.GRID.dg' on 'rac1' succeeded CRS-2673: Attempting to stop 'ora.asm' on 'rac1' CRS-2677: Stop of 'ora.asm' on 'rac1' succeeded CRS-2673: Attempting to stop 'ora.ons' on 'rac1' CRS-2677: Stop of 'ora.ons' on 'rac1' succeeded CRS-2673: Attempting to stop 'ora.net1.network' on 'rac1' CRS-2677: Stop of 'ora.net1.network' on 'rac1' succeeded CRS-2792: Shutdown of Cluster Ready Services-managed resources on 'rac1' has completed CRS-2677: Stop of 'ora.crsd' on 'rac1' succeeded CRS-2673: Attempting to stop 'ora.drivers.acfs' on 'rac1' CRS-2673: Attempting to stop 'ora.ctssd' on 'rac1' CRS-2673: Attempting to stop 'ora.evmd' on 'rac1' CRS-2673: Attempting to stop 'ora.asm' on 'rac1' CRS-2673: Attempting to stop 'ora.mdnsd' on 'rac1' CRS-2677: Stop of 'ora.mdnsd' on 'rac1' succeeded CRS-2677: Stop of 'ora.evmd' on 'rac1' succeeded CRS-2677: Stop of 'ora.ctssd' on 'rac1' succeeded CRS-2677: Stop of 'ora.asm' on 'rac1' succeeded CRS-2673: Attempting to stop 'ora.cluster_interconnect.haip' on 'rac1' CRS-2677: Stop of 'ora.cluster_interconnect.haip' on 'rac1' succeeded CRS-2673: Attempting to stop 'ora.cssd' on 'rac1' CRS-2677: Stop of 'ora.cssd' on 'rac1' succeeded CRS-2673: Attempting to stop 'ora.crf' on 'rac1' CRS-2677: Stop of 'ora.drivers.acfs' on 'rac1' succeeded CRS-2677: Stop of 'ora.crf' on 'rac1' succeeded CRS-2673: Attempting to stop 'ora.gipcd' on 'rac1' CRS-2677: Stop of 'ora.gipcd' on 'rac1' succeeded CRS-2673: Attempting to stop 'ora.gpnpd' on 'rac1' CRS-2677: Stop of 'ora.gpnpd' on 'rac1' succeeded CRS-2793: Shutdown of Oracle High Availability Services-managed resources on 'rac1' has completed CRS-4133: Oracle High Availability Services has been stopped. [root@rac1 bin]# ./crsctl start crs CRS-4123: Oracle High Availability Services has been started. [root@rac1 bin]# ./crsctl check crs CRS-4638: Oracle High Availability Services is online CRS-4537: Cluster Ready Services is online CRS-4529: Cluster Synchronization Services is online CRS-4533: Event Manager is online [root@rac1 bin]# ./crsctl stat res -t -init -------------------------------------------------------------------------------- NAME TARGET STATE SERVER STATE_DETAILS -------------------------------------------------------------------------------- Cluster Resources -------------------------------------------------------------------------------- ora.asm 1 ONLINE ONLINE rac1 Started ora.cluster_interconnect.haip 1 ONLINE OFFLINE ora.crf 1 ONLINE ONLINE rac1 ora.crsd 1 ONLINE ONLINE rac1 ora.cssd 1 ONLINE ONLINE rac1 ora.cssdmonitor 1 ONLINE ONLINE rac1 ora.ctssd 1 ONLINE ONLINE rac1 ACTIVE:0 ora.diskmon 1 OFFLINE OFFLINE ora.drivers.acfs 1 ONLINE ONLINE rac1 ora.evmd 1 ONLINE ONLINE rac1 ora.gipcd 1 ONLINE ONLINE rac1 ora.gpnpd 1 ONLINE ONLINE rac1 ora.mdnsd 1 ONLINE ONLINE rac1 [root@rac1 bin]# |

不过需要注意的是:当修改之后,两个节点都必须要重启CRS,否则仅仅重启一个节点的CRS是不行的,ASM实例是无法启动的。

对于HAIP异常,为什么会导致节点的CRS无法正常启动呢?关于这一点,我们来看下该资源的属性就知道了,如下:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 |

NAME=ora.cluster_interconnect.haip TYPE=ora.haip.type ACL=owner:root:rw-,pgrp:oinstall:rw-,other::r--,user:oracle:r-x ACTION_FAILURE_TEMPLATE= ACTION_SCRIPT= ACTIVE_PLACEMENT=0 AGENT_FILENAME=%CRS_HOME%/bin/orarootagent%CRS_EXE_SUFFIX% AUTO_START=always CARDINALITY=1 CHECK_INTERVAL=30 DEFAULT_TEMPLATE= DEGREE=1 DESCRIPTION="Resource type for a Highly Available network IP" ENABLED=0 FAILOVER_DELAY=0 FAILURE_INTERVAL=0 FAILURE_THRESHOLD=0 HOSTING_MEMBERS= LOAD=1 LOGGING_LEVEL=1 NOT_RESTARTING_TEMPLATE= OFFLINE_CHECK_INTERVAL=0 PLACEMENT=balanced PROFILE_CHANGE_TEMPLATE= RESTART_ATTEMPTS=5 SCRIPT_TIMEOUT=60 SERVER_POOLS= START_DEPENDENCIES=hard(ora.gpnpd,ora.cssd)pullup(ora.cssd) START_TIMEOUT=60 STATE_CHANGE_TEMPLATE= STOP_DEPENDENCIES=hard(ora.cssd) STOP_TIMEOUT=0 UPTIME_THRESHOLD=1m USR_ORA_AUTO= USR_ORA_IF= USR_ORA_IF_GROUP=cluster_interconnect USR_ORA_IF_THRESHOLD=20 USR_ORA_NETMASK= USR_ORA_SUBNET= |

可以看到,如果该资源有异常,现在gpnpd,cssd都是有问题的。

备注:实际上还可以通过在asm 层面指定cluster_interconnects来避免haip的问题。

One Response to “HAIP异常,导致RAC节点无法启动的解决方案”

应该就是多播的问题,因为11.2.0.2是第一个HAIP的版本,仅仅是这个版本需要多播,从11.2.0.3开始已经放弃多播的方式来实现HAIP了,所以11.2.0.3上多播检测不通可以使用HAIP是正常的,而11.2.0.2上必须保证多播检测正常,否则肯定无法使用HAIP~

Leave a Reply

You must be logged in to post a comment.