一个55TB的Oracle RAC (ASM)恢复case

本站文章除注明转载外,均为本站原创: 转载自love wife love life —Roger的Oracle/MySQL/PostgreSQL数据恢复博客

本文链接地址: 一个55TB的Oracle RAC (ASM)恢复case

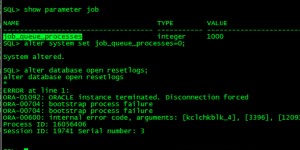

前几天某客户的一个数据库出现故障,需要我们紧急救援支持。了解了一下环境,着实也吓了一跳,数据量55TB左右。首先我们来看看故障的信息:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 |

Fri Mar 25 22:57:10 2016 Errors in file /oracle/app/oracle/diag/rdbms/njsdb/njsdb1/trace/xxxx1_dia0_30474350.trc (incident=640081): ORA-32701: Possible hangs up to hang ID=80 detected Incident details in: /oracle/app/oracle/diag/rdbms/xxxx/xxxx1/incident/incdir_640081/xxxx1_dia0_30474350_i640081.trc DIA0 requesting termination of session sid:10347 with serial # 16419 (ospid:29884706) on instance 2 due to a LOCAL, HIGH confidence hang with ID=80. Hang Resolution Reason: Although the number of affected sessions did not justify automatic hang resolution initially, this previously ignored hang was automatically resolved. DIA0: Examine the alert log on instance 2 for session termination status of hang with ID=80. Fri Mar 25 22:59:26 2016 minact-scn: useg scan erroring out with error e:12751 Suspending MMON action 'Block Cleanout Optim, Undo Segment Scan' for 82800 seconds Fri Mar 25 22:59:35 2016 Sweep [inc][640081]: completed Sweep [inc2][640081]: completed Fri Mar 25 22:59:57 2016 Errors in file /oracle/app/oracle/diag/rdbms/xxxx/xxxx1/trace/xxxx1_dbw7_27263388.trc: ORA-27063: number of bytes read/written is incorrect IBM AIX RISC System/6000 Error: 78: Connection timed out Additional information: -1 Additional information: 8192 WARNING: Write Failed. group:3 disk:35 AU:241007 offset:262144 size:8192 Fri Mar 25 22:59:57 2016 Errors in file /oracle/app/oracle/diag/rdbms/xxxx/xxxx1/trace/xxxx1_ora_10289540.trc: ORA-27072: File I/O error IBM AIX RISC System/6000 Error: 78: Connection timed out Additional information: 7 Additional information: 41229344 Additional information: -1 WARNING: Read Failed. group:3 disk:35 AU:20131 offset:540672 size:8192 Errors in file /oracle/app/oracle/diag/rdbms/xxxx/xxxx1/trace/xxxx1_dbw7_27263388.trc: ORA-27063: number of bytes read/written is incorrect IBM AIX RISC System/6000 Error: 78: Connection timed out Additional information: -1 Additional information: 8192 WARNING: Write Failed. group:3 disk:35 AU:241007 offset:237568 size:8192 Fri Mar 25 22:59:57 2016 Errors in file /oracle/app/oracle/diag/rdbms/xxxx/xxxx1/trace/xxxx1_dbw3_30212292.trc: ORA-27063: number of bytes read/written is incorrect IBM AIX RISC System/6000 Error: 78: Connection timed out Additional information: -1 Additional information: 8192 。。。。。。 WARNING: failed to write mirror side 1 of virtual extent 5096 logical extent 0 of file 583 in group 3 on disk 35 allocation unit 241007 Errors in file /oracle/app/oracle/diag/rdbms/xxxx/xxxx1/trace/xxxx1_dbw3_30212292.trc: ORA-27063: number of bytes read/written is incorrect IBM AIX RISC System/6000 Error: 78: Connection timed out Additional information: -1 Additional information: 8192 。。。。。。 ORA-15080: synchronous I/O operation to a disk failed WARNING: failed to write mirror side 1 of virtual extent 5096 logical extent 0 of file 583 in group 3 on disk 35 allocation unit 241007 WARNING: failed to write mirror side 1 of virtual extent 5002 logical extent 0 of file 585 in group 3 on disk 35 allocation unit 242538 Errors in file /oracle/app/oracle/diag/rdbms/xxxx/xxxx1/trace/xxxx1_dbw3_30212292.trc: ORA-15080: synchronous I/O operation to a disk failed |

从前面的日志可以看出,该数据库节点从25号22:57开始报错,开始可能是出现了部分session hung的情况,接着出现了写失败的操作。而其中写失败的是第35个磁盘。

当然,这里仅仅是一个warning,因此我们还不能判断是磁盘是否有问题。

后面我们跟客户了解,当时的现象应该是存储链路出现了异常,导致数据库IO出现异常。这也符合之前的现象描述。

那么我们进一步分析后面客户的操作,看看之前他们都进行了哪些相关的操作?

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 |

Sat Mar 26 01:13:51 2016 ALTER DATABASE OPEN This instance was first to open Abort recovery for domain 0 Errors in file /oracle/app/oracle/diag/rdbms/xxxx/xxxx1/trace/xxxx1_ora_27853116.trc: ORA-01113: file 763 needs media recovery ORA-01110: data file 763: '+DATA/xxxx/datafile/ts_icp_bill.1028.881083603' ORA-1113 signalled during: ALTER DATABASE OPEN... Sat Mar 26 01:14:14 2016 ...... ...... Sat Mar 26 02:02:14 2016 ALTER DATABASE RECOVER database Media Recovery Start started logmerger process Sat Mar 26 02:02:18 2016 WARNING! Recovering data file 763 from a fuzzy backup. It might be an online backup taken without entering the begin backup command. 。。。。。 WARNING! Recovering data file 779 from a fuzzy backup. It might be an online backup taken without entering the begin backup command. Sat Mar 26 02:02:18 2016 。。。。。。 Sat Mar 26 02:04:15 2016 Errors in file /oracle/app/oracle/diag/rdbms/xxxx/xxxx1/trace/xxxx1_pr00_36110522.trc: ORA-01547: warning: RECOVER succeeded but OPEN RESETLOGS would get error below ORA-01194: file 1 needs more recovery to be consistent ORA-01110: data file 1: '+DATA/xxxx/datafile/system.261.880958693' Slave exiting with ORA-1547 exception Errors in file /oracle/app/oracle/diag/rdbms/xxxx/xxxx1/trace/xxxx1_pr00_36110522.trc: ORA-01547: warning: RECOVER succeeded but OPEN RESETLOGS would get error below ORA-01194: file 1 needs more recovery to be consistent ORA-01110: data file 1: '+DATA/xxxx/datafile/system.261.880958693' ORA-1547 signalled during: alter database recover cancel... Sat Mar 26 02:04:43 2016 |

我们可以看到,客户进行了正常的alter database open,但是Oracle提示有部分文件需要recover。那么进行recover database操作呢,则提示有部分文件可能来自fuzzy backup.

这是什么意思呢? 这其实是说这几个文件的检查点比较旧,需要很早之前的日志来进行recover。

由于这是一个非归档的数据库,因此很可能有问题的这几个文件需要日志已经被覆盖。

通过比较scn,我们可以判断这几个文件需要的redo信息已经被覆盖了。这里我要提醒大家的是,不要仅仅只看alert log就轻易下判断。

仅仅看alert log我们可能认为只有几个文件问题。后续我想,如果是仅仅有几个文件有问题,那么我跳过这部分文件进行recover 不就行了吗? 因为这样可以实现数据的最大程度恢复。

于是我执行了下面的命令:

|

1 2 3 4 |

run { set until scn 14586918070973; recover database skip forever tablespace ts_icp_bill,ts_icp_bill_idx,ts_wj_bill,ts_wj_bill_idx,ts_js_bill; } |

上面这个命令,其实是比较致命的,因为Oracle 会将这里skip的表空间里面的文件全部进行offline drop。

所以这里其实上述的做法是有些欠妥的。

我进一步根据文件的scn和v$log的scn 信息进行比较,发现其实有605个文件可能都需要进行recover;因为全库已经有2000个左右的数据文件。

这里我根据scn进行大致判断然后产生2个脚本进行文件级别的recover,大致获取脚本如下:

|

1 2 3 4 |

spool recover1.sh set pagesize 160 long 9999 select 'recover datafile '||file#||';' from v$datafile_header where checkpoint_change# < xxx; spool off |

通过将其他能够进行正常recover的文件进行恢复之后,尝试打开数据库。居然能够正常open数据库。有些人可能已经到此结束了吧,其实并不然。

大家想一下?虽然数据库打开了,我们不能正常recover的605个数据文件中可能还有部分数据文件状态是recover,也就是还不是online的状态。

这种情况之下,业务是无法访问的。实际上我这里查了一下,大概有540个文件状态仍然是recover。因此我们现在还需要想办法怎么去讲这部分文件online。

处理方法其实并不难,比如通过bbed简单修改下数据文件头的checkpoint信息,就可以完成了。但是有540个文件,而且都是ASM环境。

这个修改的工作量可想而已。后面再产生一个脚本,将数据库启动到mount状态,然后将之前状态为recover的文件全部online。

然后进行recover database using backup controlfile操作。接着直接进行alter database open resetlogs。

遗憾的是没有能够直接打开数据库,报了一个如下的错误,该错误很常见,mos有问题也提到,可能跟temp有关系。

这里我这里直接将tempfile 进行drop,然后再重建控制文件,进行recover后,居然直接打开数据库了。

检查alert log,我发现还存在一个如下的错误:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 |

Recovery of Online Redo Log: Thread 1 Group 1 Seq 1 Reading mem 0 Mem# 0: +DATA/xxxx/onlinelog/group_1.2177.907527869 Mem# 1: +FRA/xxxx/onlinelog/group_1.263.907527881 Block recovery completed at rba 1.46.16, scn 3396.1209160607 ORACLE Instance xxxx1 (pid = 26) - Error 607 encountered while recovering transaction (12, 33) on object 143154. Errors in file /oracle/app/oracle/diag/rdbms/xxxx/xxxx1/trace/xxxx1_smon_10289168.trc: ORA-00607: Internal error occurred while making a change to a data block ORA-00600: internal error code, arguments: [6856], [0], [1], [], [], [], [], [], [], [], [], [] Sat Mar 26 19:05:43 2016 Dumping diagnostic data in directory=[cdmp_20160326190543], requested by (instance=1, osid=10289168 (SMON)), summary=[incident=2400209]. Starting background process QMNC Sat Mar 26 19:05:44 2016 QMNC started with pid=40, OS id=17432578 LOGSTDBY: Validating controlfile with logical metadata LOGSTDBY: Validation complete Sat Mar 26 19:05:45 2016 Sweep [inc][2400209]: completed Sweep [inc2][2400209]: completed Errors in file /oracle/app/oracle/diag/rdbms/xxxx/xxxx1/trace/xxxx1_smon_10289168.trc (incident=2400210): ORA-00600: internal error code, arguments: [6856], [0], [13], [], [], [], [], [], [], [], [], [] Incident details in: /oracle/app/oracle/diag/rdbms/xxxx/xxxx1/incident/incdir_2400210/xxxx1_smon_10289168_i2400210.trc Dumping diagnostic data in directory=[cdmp_20160326190545], requested by (instance=1, osid=10289168 (SMON)), summary=[abnormal process termination]. Starting background process CJQ0 Sat Mar 26 19:05:46 2016 CJQ0 started with pid=43, OS id=11010116 Sat Mar 26 19:05:47 2016 db_recovery_file_dest_size of 2047887 MB is 0.21% used. This is a user-specified limit on the amount of space that will be used by this database for recovery-related files, and does not reflect the amount of space available in the underlying filesystem or ASM diskgroup. Use ADRCI or Support Workbench to package the incident. See Note 411.1 at My Oracle Support for error and packaging details. Block recovery from logseq 1, block 60 to scn 14586918097855 Recovery of Online Redo Log: Thread 1 Group 1 Seq 1 Reading mem 0 Mem# 0: +DATA/xxxx/onlinelog/group_1.2177.907527869 Mem# 1: +FRA/xxxx/onlinelog/group_1.263.907527881 Dumping diagnostic data in directory=[cdmp_20160326190547], requested by (instance=1, osid=10289168 (SMON)), summary=[incident=2400210]. Block recovery completed at rba 1.91.16, scn 3396.1209160640 ORACLE Instance xxxx1 (pid = 26) - Error 607 encountered while recovering transaction (15, 3) on object 143247. Errors in file /oracle/app/oracle/diag/rdbms/xxxx/xxxx1/trace/xxxx1_smon_10289168.trc: ORA-00607: Internal error occurred while making a change to a data block ORA-00600: internal error code, arguments: [6856], [0], [13], [], [], [], [], [], [], [], [], [] Starting background process SMCO Dumping diagnostic data in directory=[cdmp_20160326190549], requested by (instance=1, osid=10289168 (SMON)), summary=[abnormal process termination]. Sat Mar 26 19:05:49 2016 SMCO started with pid=46, OS id=2949376 Setting Resource Manager plan SCHEDULER[0x3198]:DEFAULT_MAINTENANCE_PLAN via scheduler window Setting Resource Manager plan DEFAULT_MAINTENANCE_PLAN via parameter Sat Mar 26 19:05:49 2016 |

很明显,上述错误是指smon进程在进行事务恢复时,发现有2个事务无法进行恢复。

看到上述的错误,或许有人会说可能是undo出现损坏,导致无法进行事务恢复。实际上这里并不是,我通过dbv检查发现undo文件都是完好的。

无论怎讲,这里要解决这个问题,相对简单,定位到是什么对象,重建就好。

Leave a Reply

You must be logged in to post a comment.