关于Disk file operation IO的一个案例分析

本站文章除注明转载外,均为本站原创: 转载自love wife love life —Roger的Oracle/MySQL/PostgreSQL数据恢复博客

本文链接地址: 关于Disk file operation IO的一个案例分析

最近某银行新核心上线,我司同事连续奋战了大半年,真不容易。在此为大家的辛苦付出点赞。上线后,用户的EM监控突然来了一个告警;

最开始以为是个简单的问题,因此就简单查询了一下ash数据,发现主要是一个SQL语句上:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 |

1151 3wybn83gkuc4k oracle@mccsdb01 (PPA7) Disk file operations I/O 8 FileOperation 8 filetype 2020-06-15 14:39 1151 3wybn83gkuc4k oracle@mccsdb01 (PPA7) Disk file operations I/O 8 FileOperation 8 filetype 2020-06-15 14:39 1151 3wybn83gkuc4k oracle@mccsdb01 (PPA7) Disk file operations I/O 8 FileOperation 8 filetype 2020-06-15 14:39 1151 3wybn83gkuc4k oracle@mccsdb01 (PPA7) Disk file operations I/O 8 FileOperation 8 filetype 2020-06-15 14:39 1151 3wybn83gkuc4k oracle@mccsdb01 (PPA7) Disk file operations I/O 8 FileOperation 8 filetype 2020-06-15 14:39 1151 3wybn83gkuc4k oracle@mccsdb01 (PPA7) Disk file operations I/O 8 FileOperation 8 filetype 2020-06-15 14:39 1151 3wybn83gkuc4k oracle@mccsdb01 (PPA7) Disk file operations I/O 8 FileOperation 8 filetype 2020-06-15 14:39 1151 3wybn83gkuc4k oracle@mccsdb01 (PPA7) Disk file operations I/O 8 FileOperation 8 filetype 2020-06-15 14:39 1151 3wybn83gkuc4k oracle@mccsdb01 (PPA7) Disk file operations I/O 8 FileOperation 8 filetype 2020-06-15 14:39 1151 3wybn83gkuc4k oracle@mccsdb01 (PPA7) Disk file operations I/O 8 FileOperation 8 filetype 2020-06-15 14:39 1151 3wybn83gkuc4k oracle@mccsdb01 (PPA7) Disk file operations I/O 8 FileOperation 8 filetype 2020-06-15 14:39 1151 3wybn83gkuc4k oracle@mccsdb01 (PPA7) Disk file operations I/O 8 FileOperation 8 filetype 2020-06-15 14:39 1151 3wybn83gkuc4k oracle@mccsdb01 (PPA7) Disk file operations I/O 8 FileOperation 8 filetype 2020-06-15 14:39 1151 3wybn83gkuc4k oracle@mccsdb01 (PPA7) Disk file operations I/O 8 FileOperation 8 filetype 2020-06-15 14:39 1151 3wybn83gkuc4k oracle@mccsdb01 (PPA7) Disk file operations I/O 8 FileOperation 8 filetype 2020-06-15 14:39 1151 3wybn83gkuc4k oracle@mccsdb01 (PPA7) Disk file operations I/O 8 FileOperation 8 filetype 2020-06-15 14:39 2007 rman@mccsdb01 (TNS V1-V3) Disk file operations I/O 8 FileOperation 8 filetype 2020-06-15 14:47 2853 rman@mccsdb01 (TNS V1-V3) Disk file operations I/O 5 FileOperation 1 filetype 2020-06-15 14:50 103 rman@mccsdb01 (TNS V1-V3) Disk file operations I/O 8 FileOperation 8 filetype 2020-06-15 15:00 1626 rman@mccsdb01 (TNS V1-V3) Disk file operations I/O 8 FileOperation 8 filetype 2020-06-15 15:02 |

该SQL的代码如下:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 |

SELECT TO_CHAR(current_timestamp AT TIME ZONE 'GMT', 'YYYY-MM-DD HH24:MI:SS TZD') AS curr_timestamp, SUM(failed_count) AS failed_count, TO_CHAR(MIN(first_occur_time), 'yyyy-mm-dd hh24:mi:ss') AS first_occur_time, TO_CHAR(MAX(last_occur_time), 'yyyy-mm-dd hh24:mi:ss') AS last_occur_time FROM (SELECT COUNT(db_user) AS failed_count, MIN(extended_timestamp) AS first_occur_time, MAX(extended_timestamp) AS last_occur_time FROM sys.dba_common_audit_trail WHERE action BETWEEN 100 AND 102 AND returncode != 0 AND STATEMENT_TYPE = 'LOGON' AND extended_timestamp >= current_timestamp - TO_DSINTERVAL('0 0:30:00') UNION SELECT COUNT(DBUSERNAME) AS failed_count, MIN(event_timestamp) AS first_occur_time, MAX(event_timestamp) AS last_occur_time FROM unified_audit_trail WHERE ACTION_NAME = 'LOGON' and return_code <> 0 AND event_timestamp >= current_timestamp - TO_DSINTERVAL('0 0:30:00')) |

从代码来看,这是一个跟审计相关的SQL语句。第一直觉是这个SQL执行效率可能不高;但是其出现的等待事件是IO相关。因此用户非常关系是不是系统IO有问题?如何判断IO是否有问题呢?其实我们可以通过脚本发现查询相关event的平均IO等待事件时间即可:

|

1 2 3 4 5 6 7 8 9 10 11 |

----and SAMPLE_TIME = to_Date('2020-06-15 15:09','yyyy-mm-dd hh24:mi') ---group byinst_id,event,to_char(SAMPLE_TIME,'yyyy-mm-dd hh24:mi'),p1,p3 order by 1,3,5; BTIME EVENT_NAME AVG_MS CT ------------------------ ------------------------------ --------- ------------ 15-JUN-20 14:30 Disk file operations I/O 19.42 1,293,197 15-JUN-20 15:00 Disk file operations I/O .30 43 15-JUN-20 15:00 Disk file operations I/O 19.53 1,294,049 15-JUN-20 15:00 db file sequential read .40 668,462 SYS@mccs1> |

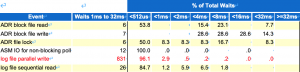

如果说上述信息还不足以证明的话,那么其实可以查看awr报告的直方图信息:

我们知道Oracle为了确保安全性,实现的是日志写优先原则;每一笔事务的提交,Oracle lgwr进程都必须将对应的日志刷新到磁盘;因此可以通过lgwr 进程的写操作来判断数据库主机到存储之间的链路和存储IO是否正常。很明显,从上述图表来看,lgwr file parallel write没有超过8ms的等待。因此可以排除链路和存储的问题。

最开始由于怀疑是SQL问题,尝试收集了几个表的统计信息之后,运行了一下SQL,都在秒级能够返回结果,以为解决了问题。没想到,第二天监控又爆出来了。仍然是相同的问题告警。

这是我再次回馈到分析这个sql的执行计划,该执行计划如下所示:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 |

Id Operation Name Rows Bytes Cost (%CPU) Time Pstart Pstop TQ IN-OUT PQ Distrib 0 SELECT STATEMENT 22 (100) 1 SORT AGGREGATE 1 57 2 VIEW 2 114 22 (10) 00:00:01 3 SORT UNIQUE 2 167 22 (10) 00:00:01 4 UNION-ALL 5 SORT AGGREGATE 1 88 10 (10) 00:00:01 6 VIEW DBA_COMMON_AUDIT_TRAIL 3 264 9 (0) 00:00:01 7 UNION-ALL 8 NESTED LOOPS OUTER 1 165 5 (0) 00:00:01 9 NESTED LOOPS OUTER 1 161 4 (0) 00:00:01 10 NESTED LOOPS OUTER 1 156 3 (0) 00:00:01 11 NESTED LOOPS 1 151 2 (0) 00:00:01 12 TABLE ACCESS FULL AUD$ 1 131 2 (0) 00:00:01 13 INDEX UNIQUE SCAN I_AUDIT_ACTIONS 1 20 0 (0) 14 INDEX RANGE SCAN I_SYSTEM_PRIVILEGE_MAP 1 5 1 (0) 00:00:01 15 INDEX RANGE SCAN I_SYSTEM_PRIVILEGE_MAP 1 5 1 (0) 00:00:01 16 INDEX RANGE SCAN I_STMT_AUDIT_OPTION_MAP 1 4 1 (0) 00:00:01 17 NESTED LOOPS OUTER 1 187 4 (0) 00:00:01 18 NESTED LOOPS OUTER 1 183 3 (0) 00:00:01 19 NESTED LOOPS OUTER 1 178 2 (0) 00:00:01 20 FILTER 21 NESTED LOOPS OUTER 1 173 1 (0) 00:00:01 22 PX COORDINATOR 23 PX SEND QC (RANDOM) :TQ10000 1 153 Q1,00 P->S QC (RAND) 24 VIEW GV$XML_AUDIT_TRAIL Q1,00 PCWP 25 FIXED TABLE FULL X$XML_AUDIT_TRAIL 1 153 Q1,00 PCWP 26 INDEX RANGE SCAN I_AUDIT_ACTIONS 1 20 1 (0) 00:00:01 27 INDEX RANGE SCAN I_SYSTEM_PRIVILEGE_MAP 1 5 1 (0) 00:00:01 28 INDEX RANGE SCAN I_SYSTEM_PRIVILEGE_MAP 1 5 1 (0) 00:00:01 29 INDEX RANGE SCAN I_STMT_AUDIT_OPTION_MAP 1 4 1 (0) 00:00:01 30 FILTER 31 TABLE ACCESS FULL FGA_LOG$ 1 92 2 (0) 00:00:01 32 SORT AGGREGATE 1 79 12 (9) 00:00:01 33 VIEW UNIFIED_AUDIT_TRAIL 2 158 11 (0) 00:00:01 34 UNION-ALL 35 NESTED LOOPS OUTER 1 186 3 (0) 00:00:01 36 NESTED LOOPS OUTER 1 182 2 (0) 00:00:01 37 NESTED LOOPS 1 177 1 (0) 00:00:01 38 PX COORDINATOR 39 PX SEND QC (RANDOM) :TQ20000 1 153 Q2,00 P->S QC (RAND) 40 VIEW GV$UNIFIED_AUDIT_TRAIL Q2,00 PCWP 41 FIXED TABLE FULL X$UNIFIED_AUDIT_TRAIL 1 153 Q2,00 PCWP 42 TABLE ACCESS BY INDEX ROWID ALL_UNIFIED_AUDIT_ACTIONS 1 24 1 (0) 00:00:01 43 INDEX UNIQUE SCAN I_UNIFIED_AUDIT_ACTIONS 1 0 (0) 44 INDEX RANGE SCAN I_SYSTEM_PRIVILEGE_MAP 1 5 1 (0) 00:00:01 45 INDEX RANGE SCAN I_STMT_AUDIT_OPTION_MAP 1 4 1 (0) 00:00:01 46 TABLE ACCESS BY INDEX ROWID BATCHED ALL_UNIFIED_AUDIT_ACTIONS 1 24 1 (0) 00:00:01 47 NESTED LOOPS 1 63 8 (0) 00:00:01 48 NESTED LOOPS OUTER 1 39 7 (0) 00:00:01 49 NESTED LOOPS OUTER 1 35 6 (0) 00:00:01 50 PARTITION RANGE ALL 1 30 5 (0) 00:00:01 1 1048575 51 TABLE ACCESS FULL AUD$UNIFIED 1 30 5 (0) 00:00:01 1 1048575 52 INDEX RANGE SCAN I_SYSTEM_PRIVILEGE_MAP 1 5 1 (0) 00:00:01 53 INDEX RANGE SCAN I_STMT_AUDIT_OPTION_MAP 1 4 1 (0) 00:00:01 54 INDEX UNIQUE SCAN I_UNIFIED_AUDIT_ACTIONS 1 0 (0) |

再次回归到分析SQL执行计划,我们发现该SQL的视图中访问的几个表在之前都进行了检查,数据量非常小,而且执行计划本身并没有问题。唯一的可能疑点是对于X$XML_AUDIT_TRAIL 的访问。在之前的分析中,由于检查数据库审计设置,发现已关闭了数据库审计,因此忽略了这个疑点。

通过进行单次多次测试发现,基于X$XML_AUDIT_TRAIL的访问时非常慢的;而且每次访问都会出现Disk file operation IO等待。如下所示:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 |

SESSION_ID SQL_ID PROGRAM EVENT P1 P1TEXT P3 P3TEXT TO_CHAR(SAMPLE_T ---------- ------------- ------------------------- ------------------------- --- ------------------- --------- ---------------- 1151 82x1cyrkcg1jd oracle@mccsdb01 (PPA7) Disk file operations I/O 8 FileOperation 8 filetype 2020-06-16 12:18 1713 d0k3ahnmyd2a2 sqlplus@mccsdb01 (TNS V1- Disk file operations I/O 8 FileOperation 8 filetype 2020-06-16 12:16 V3) 1713 d0k3ahnmyd2a2 sqlplus@mccsdb01 (TNS V1- Disk file operations I/O 8 FileOperation 8 filetype 2020-06-16 12:16 V3) 1713 d0k3ahnmyd2a2 sqlplus@mccsdb01 (TNS V1- Disk file operations I/O 8 FileOperation 8 filetype 2020-06-16 12:16 V3) 1151 3wybn83gkuc4k oracle@mccsdb01 (PPA7) Disk file operations I/O 8 FileOperation 8 filetype 2020-06-16 12:07 1151 5g51s485pqu8f oracle@mccsdb01 (PPA7) Disk file operations I/O 8 FileOperation 8 filetype 2020-06-16 11:49 1151 3wybn83gkuc4k oracle@mccsdb01 (PPA7) Disk file operations I/O 8 FileOperation 8 filetype 2020-06-16 11:37 479 rman@mccsdb01 (TNS V1-V3) Disk file operations I/O 8 FileOperation 8 filetype 2020-06-16 11:23 10 rman@mccsdb01 (TNS V1-V3) Disk file operations I/O 8 FileOperation 8 filetype 2020-06-16 11:21 479 rman@mccsdb01 (TNS V1-V3) Disk file operations I/O 8 FileOperation 8 filetype 2020-06-16 11:17 1151 3wybn83gkuc4k oracle@mccsdb01 (PPA7) Disk file operations I/O 8 FileOperation 8 filetype 2020-06-16 11:07 1713 rman@mccsdb01 (TNS V1-V3) Disk file operations I/O 8 FileOperation 8 filetype 2020-06-16 10:45 |

而上述的d0k3ahnmyd2a2 则是我的测试SQL语句:

|

1 2 3 4 5 6 7 8 |

SYS@mccs1>select sql_text from v$sql where sql_id='d0k3ahnmyd2a2'; SQL_TEXT ------------------------------------------------------------------------------------ select count(1) from X$XML_AUDIT_TRAIL Elapsed: 00:00:00.01 SYS@mccs1> |

从验证来看,看来问题出现这个xml的表上。我们知道Disk file operation IO等待表示的对于文件的操作,比如open,close等。而且前面查询event的operation type都是8,表明确实在等待IO。那么这里的IO是不是物理IO呢?

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 |

Stat Name Statement Total Per Execution % Snap Total Elapsed Time (ms) 343,319 171,659.47 9.77 CPU Time (ms) 1,140 569.81 0.27 Executions 2 1.00 0.00 Buffer Gets 34 17.00 0.00 Disk Reads 0 0.00 0.00 Parse Calls 6 3.00 0.01 Rows 2 1.00 User I/O Wait Time (ms) 343,298 171,648.94 94.03 Cluster Wait Time (ms) 0 0.00 0.00 Application Wait Time (ms) 0 0.00 0.00 Concurrency Wait Time (ms) 0 0.00 0.00 Invalidations 0 Version Count 4 Sharable Mem(KB) 804 |

从上面的SQL report来看,其实该SQL并没有产生物理读。都没有产生物理IO,为什么是IO相关的等待呢?这就奇怪了。。。

那么唯一的解释就是该SQL可能会访问系统的文件,因为用到了xml。联想到这一层,那么怎么验证这个猜想呢?这里我分别开启2个crt窗口进行测试跟踪即可:

++Session 1

|

1 2 3 4 5 6 7 8 9 10 |

SYS@mccs1>c/9/8 1* select count(8) from X$XML_AUDIT_TRAIL SYS@mccs1>/ COUNT(8) ---------- 0 Elapsed: 00:00:08.20 SYS@mccs1> |

可以看到该SQL查询花费了8.2秒。

+++Session2

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 |

Tue Jun 16 13:06:51 2020 0.0000: kread(7, "\0\0\01506\0\0\0\0\00305".., 8208) (sleeping...) 0.0000: kread(7, "\0\0019006\0\0\0\0\011 i".., 8208) = 400 7.3600: _poll(0x072000000B4E1518, 3, 0) = 0 7.3611: statx("/usr/share/lib/zoneinfo/Asia/Shanghai", 0x0FFFFFFFFFFF68F0, 176, 0) = 0 7.3613: statx("/u01/app/oracle/admin/mccs/adump/adx_mccs1_ora_29360546.txt", 0x0FFFFFFFFFFF3AD0, 176, 010) Err#2 ENOENT 7.3615: statx("/u01/app/oracle/admin/mccs/adump/adx_mccs1_ora_29360546.txt", 0x0FFFFFFFFFFF3A10, 176, 010) Err#2 ENOENT 7.3616: statx("/u01/app/oracle/admin/mccs/adump/adx_mccs1_ora_29360546.txt", 0x0FFFFFFFFFFF3440, 176, 010) Err#2 ENOENT 7.3618: statx("/u01/app/oracle/admin/mccs/adump/", 0x0FFFFFFFFFFF2098, 176, 010) = 0 7.3620: statfs("/u01/app/oracle/admin/mccs/adump/adx_mccs1_ora_29360546.txt", 0x0FFFFFFFFFFF1F10) Err#2 ENOENT 7.3622: statfs("/u01/app/oracle/admin/mccs/adump/", 0x0FFFFFFFFFFF1F10) = 0 7.3623: mntctl(2, 512, "") = 0 7.3625: mntctl(2, 1888, "") = 13 7.3627: kopen("/etc/vfs", O_RDONLY) = 11 7.3628: kioctl(11, 22528, 0x0000000000000000, 0x0000000000000000) Err#25 ENOTTY 7.3630: kioctl(11, 22528, 0x0000000000000000, 0x0000000000000000) Err#25 ENOTTY 7.3631: kread(11, " # @ ( # ) 7 7\t 1 . 3".., 4096) = 1786 7.3633: close(11) = 0 7.3634: kopen("/u01/app/oracle/admin/mccs/adump/adx_mccs1_ora_29360546.txt", O_WRONLY|O_CREAT|O_TRUNC|O_LARGEFILE, S_IRUSR|S_IWUSR|S_IRGRP|S_IWGRP) = 11 7.3641: fstatx(11, 0x0FFFFFFFFFFF1F10, 176, 010) = 0 7.3642: close(11) = 0 7.3644: statx("/u01/app/oracle/admin/mccs/adump/adx_mccs1_ora_29360546.txt", 0x0FFFFFFFFFFF3580, 176, 010) = 0 7.3646: statx("/u01/app/oracle/admin/mccs/adump/", 0x0FFFFFFFFFFF21D8, 176, 010) = 0 7.3647: statfs("/u01/app/oracle/admin/mccs/adump/adx_mccs1_ora_29360546.txt", 0x0FFFFFFFFFFF2050) = 0 7.3649: mntctl(2, 512, "") = 0 7.3650: mntctl(2, 1888, "") = 13 7.3652: kopen("/etc/vfs", O_RDONLY) = 11 7.3654: kioctl(11, 22528, 0x0000000000000000, 0x0000000000000000) Err#25 ENOTTY ...... 7.4088: close(12) = 0 7.4089: chdir("adump/") = 0 7.4090: statx(".", 0x0FFFFFFFFFFED580, 176, 010) = 0 7.4092: kopen(".", O_RDONLY|O_LARGEFILE) = 12 7.4093: getdirent64(12, 0x0000000121328E70, 4096) = 4056 7.4095: klseek(12, 0, 0, 0x0FFFFFFFFFFED480) = 0 7.4096: kfcntl(12, F_GETFD, 0x0000000120C4FFD8) = 0 7.4098: kfcntl(12, F_SETFD, 0x0000000000000001) = 0 7.4099: getdirent64(12, 0x0000000121328E70, 4096) = 4056 ...... 15.5541: getdirent64(12, 0x0000000121328E70, 4096) = 4032 15.5542: getdirent64(12, 0x0000000121328E70, 4096) = 4032 15.5544: getdirent64(12, 0x0000000121328E70, 4096) = 4032 15.5546: getdirent64(12, 0x0000000121328E70, 4096) = 4032 15.5547: getdirent64(12, 0x0000000121328E70, 4096) = 4032 15.5549: getdirent64(12, 0x0000000121328E70, 4096) = 4032 15.5551: getdirent64(12, 0x0000000121328E70, 4096) = 4032 15.5552: getdirent64(12, 0x0000000121328E70, 4096) = 936 15.5554: getdirent64(12, 0x0000000121328E70, 4096) = 0 15.5555: close(12) = 0 15.5557: chdir("/u01/app/oracle/admin/mccs") = 0 15.5559: chdir("/u01/app/oracle/admin") = 0 15.5560: chdir("/u01/app/oracle") = 0 15.5562: chdir("/u01/app") = 0 15.5563: chdir("/u01") = 0 15.5564: chdir("/") = 0 15.5566: chdir("/u01/app/oracle/product/19c/dbhome_1/dbs") = 0 15.5568: close(11) = 0 15.5571: _poll(0x072000000B4E1518, 3, 0) = 0 15.5572: statx("/u01/app/oracle/admin/mccs/adump/adx_mccs1_ora_29360546.txt", 0x0FFFFFFFFFFF44A0, 176, 011) = 0 15.5574: unlink("/u01/app/oracle/admin/mccs/adump/adx_mccs1_ora_29360546.txt") = 0 15.5586: kwrite(10, "\0\0018506\0\0\0\0\010\0".., 389) = 389 15.5588: kread(7, "\0\0\01506\0\0\0\0\00305".., 8208) = 21 15.5589: kwrite(10, "\0\0\0 0\0\0\0\004\0".., 180) = 180 17.5592: kread(7, "\0\0\01506\0\0\0\0\00305".., 8208) (sleeping...) |

从上的跟踪信息来看;我们的测试SQL语句执行时间为8.2秒,其中从_poll函数开始到close(12)操作截止,就消耗了8.19秒。其中getdirent64函数操作就消耗了8.14秒。该函数表现获取文件系统目录和文件信息;同时从close的句柄操作来看,主要是慢在/adump目录的操作上。这也难怪上述SQL执行为什么会这么慢了。而且每次执行必然会出现Disk file operation IO等待。

进一步检查/adump目录发现,该目录下的小文件居然高达263万个。

|

1 2 |

[oracle@xxxxdb01:/u01/app/oracle/admin/xxxx/adump]$ls|wc -l 2634022 |

到这里问题基本上就非常清晰了。只要清理这个目录的垃圾文件即可。

那么问题就来了,数据库都已经关闭了审计,为什么还会产生这么多文件呢?实际上大概估算一下,该系统从去年安装部署后至今,大概也就200多天;也就是说该目录平均每天增加1万多个小文件。其实这是因为Oracle数据库默认情况下仍然会审计sys的登陆操作。其他业务用户的审计操作均已关闭。

换句话讲,也就是说该系统的sys用户登陆每天高达1w+次。这是什么神操作呢 ?

最好检查crontab发现是用户有一个清理日志的脚本,忘记注释了,而该脚本几乎是每秒登陆一次(实际上大概是2-3秒)。也就就解释了为什么每天产生12000个左右的文件了。

Leave a Reply

You must be logged in to post a comment.